Who knew Minecraft offered such a rich training ground for AI and machine learning algorithms? Earlier this month, Facebook researchers posited that the hit game’s constraints make it well-suited to natural language understanding experiments. And in a newly published paper, a team at Carnegie Mellon describes MineRL, a 130GB to 734GB corpus intended to inform AI development. It contains over 60 million annotated state-action pairs (recorded over 500 hours) across a variety of related Minecraft tasks, alongside a novel data collection scheme that allows for the addition of tasks and the gathering of complete state information suitable for “a variety of methods.”

“As demonstrated in the computer vision and natural language processing communities, large-scale datasets have the capacity to facilitate research by serving as an experimental and benchmarking platform for new methods,” wrote the coauthors. “However, existing datasets compatible with reinforcement learning simulators do not have sufficient scale, structure, and quality to enable the further development and evaluation of methods focused on using human examples. Therefore, we introduce a comprehensive, large-scale, simulator paired dataset of human demonstrations.”

For those unfamiliar, Minecraft is a voxel-based building and crafting game with procedurally created worlds containing block-based trees, mountains, fields, animals, non-player characters (NPCs), and so on. Blocks are placed on a 3D voxel grid, and each voxel in the grid contains one material. Players can move, place, or remove blocks of different types, and attack or fend off attacks from NPCs or other players.
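
To make the voxel idea concrete, here is a minimal Python sketch of a grid in which each cell holds at most one material and blocks can be placed or removed. It is a toy illustration only: the `VoxelGrid` class, its method names, and the material string are assumptions made for this example and have nothing to do with Minecraft's actual internals.

```python
from typing import Dict, Optional, Tuple

Coord = Tuple[int, int, int]  # (x, y, z) position on the 3D grid


class VoxelGrid:
    """Toy sparse voxel grid (hypothetical): each occupied cell holds one material."""

    def __init__(self) -> None:
        self._blocks: Dict[Coord, str] = {}

    def place(self, pos: Coord, material: str) -> bool:
        """Place a block if the cell is empty; return True on success."""
        if pos in self._blocks:
            return False
        self._blocks[pos] = material
        return True

    def remove(self, pos: Coord) -> Optional[str]:
        """Remove and return the material at pos, or None if the cell is empty."""
        return self._blocks.pop(pos, None)

    def material_at(self, pos: Coord) -> Optional[str]:
        """Return the material at pos, or None if nothing is there."""
        return self._blocks.get(pos)


# Build a two-block "tree trunk", then chop the lower block.
grid = VoxelGrid()
grid.place((0, 64, 0), "oak_log")
grid.place((0, 65, 0), "oak_log")
chopped = grid.remove((0, 64, 0))
```

A dictionary keyed by coordinates keeps the sketch short; a real engine would more likely store blocks in dense chunks.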

As for MineRL, it includes six tasks that pose a variety of research challenges, including multi-agent interactions, long-term planning, vision, control, and navigation, as well as explicit and implicit subtask hierarchies. In the navigation task, players have to move to a random goal location over terrain with variable material types and geometries, while in the tree chopping task, they have to obtain wood to produce other items. In another task, players are instructed to produce objects like pickaxes, diamonds, cooked meat, and beds, and in a survival task, they must devise their own goals and secure the items needed to complete them.

Each trajectory in the corpus is a sequence of state-action pairs. Each state consists of a video frame from the player’s point of view and a set of features from the game state, namely player inventory, item collection events, distances to objectives, and player attributes (health, level, achievements). That’s supplemented with metadata like timestamped markers for when certain objectives are met, and by action data consisting of all keyboard presses, changes in view pitch and yaw caused by mouse movement, click and interaction events, and chat messages sent.
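
One way to picture a recorded trajectory is as a list of state-action steps plus objective markers. The Python sketch below is an assumption-laden illustration, not the dataset's real schema: every class and field name (`State`, `Action`, `Step`, `Trajectory`, `objective_markers`, and so on) is hypothetical, and markers are stored as step indices rather than timestamps to keep things simple.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class State:
    """Hypothetical per-step observation: a frame plus game-state features."""
    frame: bytes                       # encoded point-of-view video frame
    inventory: Dict[str, int]          # item name -> count
    distance_to_objective: float
    health: float
    level: int
    achievements: List[str] = field(default_factory=list)
    items_collected: List[str] = field(default_factory=list)


@dataclass
class Action:
    """Hypothetical per-step player input."""
    keys_pressed: List[str]
    delta_pitch: float                 # change in view pitch from mouse movement
    delta_yaw: float                   # change in view yaw from mouse movement
    clicked: bool = False
    chat_message: str = ""


@dataclass
class Step:
    state: State
    action: Action


@dataclass
class Trajectory:
    task: str
    steps: List[Step] = field(default_factory=list)
    # Assumption: objective name -> index of the step at which it was met.
    objective_markers: Dict[str, int] = field(default_factory=dict)

    def steps_until(self, objective: str) -> List[Step]:
        """Return all steps up to and including the one that met the objective."""
        end = self.objective_markers[objective]
        return self.steps[: end + 1]


# Build a three-step toy trajectory in which wood is obtained at step index 1.
def make_step(logs: int) -> Step:
    state = State(frame=b"", inventory={"log": logs},
                  distance_to_objective=0.0, health=20.0, level=0)
    return Step(state, Action(keys_pressed=["w"], delta_pitch=0.0, delta_yaw=0.0))


traj = Trajectory(task="tree_chopping",
                  steps=[make_step(0), make_step(1), make_step(1)],
                  objective_markers={"obtain_log": 1})
prefix = traj.steps_until("obtain_log")
```

Slicing by marker like this is how a learner could pull out just the portion of a demonstration that leads up to a given subgoal.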

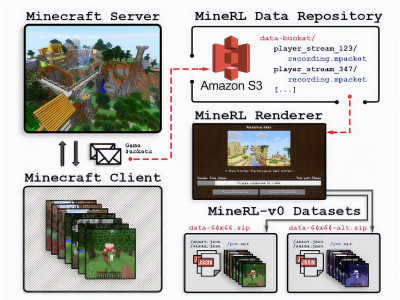

To collect the trajectory data, the researchers created an end-to-end platform comprising a public game server and a custom Minecraft client plugin that records all packet-level communication, allowing the demonstrations to be re-simulated and re-rendered with modifications to the game state. Collected telemetry data was fed into a data processing pipeline, and the task demonstrations were annotated automatically.

The researchers expect MineRL will support large-scale AI generalization studies by enabling the re-rendering of data under different conditions, such as altered lighting, camera positions, and other video rendering settings, and by allowing the injection of artificial noise into observations, rewards, and actions. “We expect it to be increasingly useful for a range of methods including inverse reinforcement learning, hierarchical learning, and life-long learning,” they wrote. “We hope MineRL will … [bolster] many branches of AI toward the common goal of developing methods capable of solving a wider range of real-world environments.”
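
As a rough illustration of what injecting artificial noise into observations, rewards, and actions could look like, here is a small Python sketch. It is not the researchers' method: the function names, the Gaussian noise model, and the random action replacement are all assumptions chosen for simplicity.

```python
import random
from typing import List


def add_observation_noise(pixels: List[int], sigma: float,
                          rng: random.Random) -> List[int]:
    """Add Gaussian noise to 8-bit pixel values, clamping results to [0, 255]."""
    return [min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in pixels]


def add_reward_noise(reward: float, sigma: float, rng: random.Random) -> float:
    """Add zero-mean Gaussian noise to a scalar reward."""
    return reward + rng.gauss(0.0, sigma)


def perturb_action(action: str, choices: List[str], flip_prob: float,
                   rng: random.Random) -> str:
    """With probability flip_prob, swap the action for a random one from choices."""
    if rng.random() < flip_prob:
        return rng.choice(choices)
    return action


# Seeded generator so repeated runs produce the same perturbations.
rng = random.Random(0)
actions = ["forward", "back", "jump", "attack"]

noisy_pixels = add_observation_noise([0, 128, 255], sigma=5.0, rng=rng)
noisy_reward = add_reward_noise(1.0, sigma=0.1, rng=rng)
noisy_action = perturb_action("forward", actions, flip_prob=0.2, rng=rng)
```

Training on perturbed copies of the same demonstrations is one common way to test how well a learned policy generalizes beyond the exact conditions under which the data was recorded.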